4 Crucial Factors for Evaluating Large Language Models in Industry Applications

Every use case is different, depending on customer needs and industry-specific guidelines. Learn how to make the right LLM choices using 4 key rubrics.

Over the past few months, I’ve had the opportunity to chat with folks from the legal, healthcare, finance, tech, and insurance industries about LLM adoption. Each of them comes with unique requirements and challenges. In healthcare, for example, privacy is king. In finance, getting the numbers right is paramount. Lawyers want specialized, fine-tuned models for tasks like drafting legal documents.

In this article, I go through the key decision factors that help you choose the right model for your particular use case.

Response Quality

As Satya Nadella stated in his 2023 keynote at Microsoft Inspire, generative AI introduces 2 main paradigm shifts:

A more natural language computer interface

A reasoning engine that sits on top of all your custom documents

Response quality is extremely important in both of these use categories. Our interface with computers has been getting closer and closer to natural language (think of how much friendlier Python is than C++, or how much friendlier C++ is than machine language). Yet the reliability of these programming languages has never really been an issue: when something goes wrong, we call it a programming bug and attribute it to human error. The more natural interface offered by LLMs creates a new problem. LLMs are known to hallucinate or give wrong answers, which introduces a new type of “AI bug.” Thus, response quality becomes extremely important.

The same is true of the second use case. While we are all comfortable using Google Search, behind the scenes Google uses vector embeddings and other matching techniques to figure out which page most likely contains the answer to your question. If a page lists wrong information, that again is a human error: someone published incorrect content. LLMs, by contrast, generate answers tailored to your question, and those answers can be wrong even when the underlying sources are correct.

Recently, there have been many new open-source LLM advances, such as Llama 2 and Falcon. A recently introduced, challenging multi-turn benchmark (MT-Bench) came with a method that uses strong LLMs as judges to evaluate other LLMs on more open-ended questions. As you can see below, models perform quite differently depending on the task. It’s worth comparing several models based on whether your organization is building customized chatbots, extracting information from text, generating custom code, doing creative writing, and so on.
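To make the LLM-as-a-judge idea a little more concrete, here is a minimal sketch of the bookkeeping side only. It assumes a judge model has already graded each answer and ended its reply with a rating in double brackets such as `[[7]]` (similar to the format MT-Bench’s single-answer grading prompt asks for). The judge replies, categories, and helper names (`parse_rating`, `scores_by_category`) are hypothetical placeholders. The point is that averaging per task category, rather than overall, is what surfaces the task-dependent differences between models.

```python
from __future__ import annotations

import re
from collections import defaultdict
from statistics import mean

# Assumed judge output convention: a final rating in double brackets, e.g. "Rating: [[7]]".
RATING_RE = re.compile(r"\[\[(\d+(?:\.\d+)?)\]\]")


def parse_rating(judge_output: str) -> float | None:
    """Return the last [[rating]] in a judge reply, or None if there is none."""
    matches = RATING_RE.findall(judge_output)
    return float(matches[-1]) if matches else None


def scores_by_category(judgments: list[tuple[str, str]]) -> dict[str, float]:
    """Average the judge ratings per task category, skipping unparseable replies."""
    buckets: dict[str, list[float]] = defaultdict(list)
    for category, judge_output in judgments:
        rating = parse_rating(judge_output)
        if rating is not None:
            buckets[category].append(rating)
    return {category: mean(ratings) for category, ratings in buckets.items()}


# Made-up judge replies for one candidate model, for illustration only.
judgments = [
    ("coding", "The code is correct but lacks comments. Rating: [[7]]"),
    ("coding", "Off-by-one error in the loop. Rating: [[4]]"),
    ("writing", "Vivid and on-topic. Rating: [[9]]"),
    ("extraction", "Missed two of the requested fields."),  # no rating, so skipped
]

print(scores_by_category(judgments))
```

Running the same judge over several candidate models and comparing their per-category dictionaries gives a rough, task-specific view of which model fits your use case.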

LLM Economics

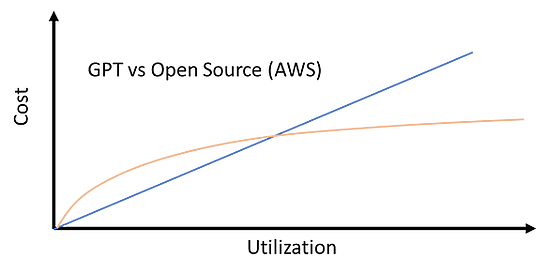

How expensive an LLM solution is depends on the type of use case you have. I go through this in detail in a separate article, LLM Economics: ChatGPT vs Open-Source.

TL;DR: For lower usage, in the range of thousands of requests per day, ChatGPT works out cheaper than open-source LLMs deployed on AWS. For millions of requests per day, open-source models deployed on AWS work out cheaper.
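The crossover in this TL;DR comes from comparing a per-request cost that grows with traffic against a roughly fixed cost for a self-hosted instance that runs around the clock. Below is a minimal sketch of that arithmetic. Every number in it (price per 1K tokens, tokens per request, hourly instance price, and how many requests one instance can absorb) is a hypothetical placeholder, not a quoted price, and it ignores engineering and operations costs. Substitute current pricing for your provider and instance type.

```python
import math

# Hypothetical placeholder values, NOT real quotes: substitute current pricing.
API_PRICE_PER_1K_TOKENS = 0.002      # USD per 1K tokens on a pay-per-use API
TOKENS_PER_REQUEST = 1_000           # prompt + completion tokens per request
INSTANCE_PRICE_PER_HOUR = 5.00       # USD per hour for one GPU instance
REQUESTS_PER_INSTANCE_DAY = 500_000  # assumed daily throughput of one instance


def api_cost_per_day(requests_per_day: int) -> float:
    """Pay-per-use API: cost scales linearly with traffic."""
    return requests_per_day * TOKENS_PER_REQUEST / 1_000 * API_PRICE_PER_1K_TOKENS


def self_hosted_cost_per_day(requests_per_day: int) -> float:
    """Self-hosted: pay for whole instances running 24 hours, regardless of usage."""
    instances = max(1, math.ceil(requests_per_day / REQUESTS_PER_INSTANCE_DAY))
    return instances * INSTANCE_PRICE_PER_HOUR * 24


for rpd in (1_000, 100_000, 1_000_000):
    print(f"{rpd:>9,} req/day  API ${api_cost_per_day(rpd):>9,.2f}  "
          f"self-hosted ${self_hosted_cost_per_day(rpd):>9,.2f}")
```

With these made-up numbers the API costs $2/day at 1,000 requests versus $120/day for an always-on instance, and the lines cross at 60,000 requests per day. The shape of the result, not the specific break-even point, is what carries over to real pricing.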

Latency

If you are serving real-time requests, e.g. a customer-facing chatbot, then latency matters, perhaps as much as (or more than) response quality. Let’s look at the latency of several OpenAI models: GPT-3, GPT-3.5, and GPT-4. Multiple benchmarks have shown that GPT-4 outperforms GPT-3.5 (ChatGPT), which in turn was a game-changing leap over GPT-3.

Say you are a creative agency that wants to use LLMs to give entrepreneurs creative suggestions, such as writing a tagline for an ice-cream shop venture. I’ve written some sample code below to generate this tagline with each of the three GPT models:

```python
# Written against the pre-1.0 openai Python package (e.g. openai==0.28), which
# provides openai.Completion and openai.ChatCompletion and reads the API key from
# the OPENAI_API_KEY environment variable. text-davinci-003 has since been retired.
import time

import openai

PROMPT = "Write a tagline for an ice cream shop."

# GPT-3: legacy completions endpoint
t0 = time.time()
response_gpt3 = openai.Completion.create(model="text-davinci-003", prompt=PROMPT)
gpt_3_time = time.time() - t0

# GPT-3.5: chat completions endpoint
t0 = time.time()
response_gpt3p5 = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[{"role": "system", "content": PROMPT}],
)
gpt_3p5_time = time.time() - t0

# GPT-4: chat completions endpoint
t0 = time.time()
response_gpt4 = openai.ChatCompletion.create(
    model="gpt-4",
    messages=[{"role": "system", "content": PROMPT}],
)
gpt_4_time = time.time() - t0

# Print the generated taglines
print(response_gpt3.choices[0].text.strip())
print(response_gpt3p5.choices[0].message.content)
print(response_gpt4.choices[0].message.content)
```

The responses are below. Personally, I prefer the GPT-4 output, but the others are not too bad.

"Cool off with all the flavor you can imagine!" ---- GPT-3

"Scoop up happiness, one flavor at a time!" ---- GPT-3.5

"Scooping Happiness One Cone at a Time" ---- GPT-4

Now, let’s look at the latency. Surprisingly, in this run GPT-3.5 returned the fastest result (faster than GPT-3), and GPT-4 was the slowest, about 1.4X slower than GPT-3.5.

```python
print(gpt_3_time, gpt_3p5_time, gpt_4_time)
# 0.8402369022369385 0.7697513103485107 1.0829169750213623
```

If the outputs are judged to be similar on industry-specific metrics, and a 1.4X slowdown is unacceptable, then GPT-3.5 is the better choice over GPT-4. But maybe latency is not a big issue: say users are emailed a custom report instead of seeing responses in real time, and response quality matters more. In that scenario, GPT-4 could be the preferred option.
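One caveat: each of the timings above is a single wall-clock measurement, and API latency varies with network conditions, server load, and how many tokens the model happens to generate. Before ruling a model in or out on latency, it is worth repeating each call several times and comparing medians (or a high percentile, if you have a user-facing latency target). Here is a minimal, model-agnostic sketch. `time_call` and `summarize` are hypothetical helper names; in practice `fn` would wrap one of the `openai` calls above, while here a short sleep stands in for it so the sketch runs offline.

```python
from __future__ import annotations

import math
import statistics
import time
from typing import Callable


def time_call(fn: Callable[[], object], runs: int = 10) -> list[float]:
    """Call fn `runs` times and return each wall-clock duration in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations: list[float]) -> dict[str, float]:
    """Median and nearest-rank 90th percentile of a non-empty list of durations."""
    ordered = sorted(durations)
    p90 = ordered[math.ceil(0.9 * len(ordered)) - 1]
    return {"median": statistics.median(ordered), "p90": p90}


def fake_llm_call() -> None:
    """Stand-in for a real API call: just sleeps for about 50 ms."""
    time.sleep(0.05)


print(summarize(time_call(fake_llm_call, runs=5)))
```

`time.perf_counter()` is used instead of `time.time()` because it is a monotonic, high-resolution clock intended for measuring short intervals.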

Privacy and Security

Many folks in the healthcare industry are very interested in LLMs as a game-changing way to pinpoint detailed medical information in troves of data. However, the same folks are also extremely concerned about privacy. They don’t like the idea of sending private data to the same API that everyone else uses. Plus, sharing individuals’ private health data with an API like ChatGPT could have severe repercussions.

There are two other options. The first is hosting open-source LLMs on cloud providers like AWS, Azure, or Google Cloud. Cloud providers have a long history of complying with healthcare guidelines and laws, such as the Health Insurance Portability and Accountability Act (HIPAA). AWS, for example, has a dedicated whitepaper on architecting applications on AWS for HIPAA compliance. I’ve also written some blog posts on how to host LLMs as APIs on private cloud instances in AWS and Azure.

The second, more private option is to deploy LLMs on-premises, on privately owned servers. Unlike hosting on the cloud or using a closed-source API like ChatGPT, deploying on-premises requires companies to invest in their own private data centers and in teams to manage them.

Notice that in the paragraph above I called on-premises hosting the more private option, but I didn’t say it was more secure. That is because both options have their own security strengths and weaknesses. The benefit of the cloud is that cloud providers have strict security standards. Cloud-based applications are also typically more resilient, because providers run redundant data centers in multiple locations. But there is a risk that malicious actors who exploit cloud systems could gain access to client data downstream.

On the other hand, on-premises data centers can be more physically secure, especially if they are close to the place of work. However, they need to be managed well; without the right team, security vulnerabilities may abound. Also, a single on-premises data center is a single point of failure, with no backup.

Takeaways

Choosing the “right” LLM is heavily application- and industry-driven. I’ve broken this choice down into 4 key factors: Quality, Price, Latency, and Privacy/Security. As you start incorporating LLMs into your workflows, this can be a good rubric for deciding which LLMs to start with. Note, however, that the right choice might change in the future.
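If you want to make the four-factor rubric explicit, one simple option is a weighted score: rate each candidate on Quality, Price, Latency, and Privacy/Security, then weight the factors according to what matters most in your industry. The sketch below is an illustration only. The candidate names, the 1 to 5 ratings, and the weights (here tilted toward privacy, as a healthcare team might choose) are invented placeholders, not measurements, and `Candidate` and `weighted_score` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass

FACTORS = ("quality", "price", "latency", "privacy")


@dataclass
class Candidate:
    name: str
    ratings: dict[str, float]  # 1 (poor) to 5 (excellent) for each factor


def weighted_score(candidate: Candidate, weights: dict[str, float]) -> float:
    """Weighted average of a candidate's factor ratings (weights need not sum to 1)."""
    total_weight = sum(weights[f] for f in FACTORS)
    return sum(candidate.ratings[f] * weights[f] for f in FACTORS) / total_weight


# Invented ratings and weights, for illustration only.
candidates = [
    Candidate("hosted API", {"quality": 5, "price": 4, "latency": 4, "privacy": 2}),
    Candidate("open-source on private cloud", {"quality": 3, "price": 3, "latency": 3, "privacy": 4}),
    Candidate("open-source on-premises", {"quality": 3, "price": 2, "latency": 3, "privacy": 5}),
]
healthcare_weights = {"quality": 0.3, "price": 0.1, "latency": 0.1, "privacy": 0.5}

ranked = sorted(candidates, key=lambda c: weighted_score(c, healthcare_weights), reverse=True)
for c in ranked:
    print(f"{c.name}: {weighted_score(c, healthcare_weights):.2f}")
```

Re-running the same candidates with different weights (say, latency-heavy for a real-time chatbot) is a quick way to see how sensitive the ranking is to your priorities.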

New LLMs may come along that offer far superior performance at a lower cost. Your usage requirements might also change. You might initially estimate that customers will make ~1k requests a day, only for this to grow to ~10k, in which case a closed-source API that charges by usage might be less economical than a privately hosted open-source model with minimal usage costs.

But if you are facing a chicken-and-egg situation where you don’t want to get started because you don’t know which model to use, I would suggest not getting stuck in analysis paralysis. Andrej Karpathy (former Director of AI at Tesla and a co-founder of OpenAI) offers good advice on this topic in his State of GPT talk. He said the best way to get started is to use an off-the-shelf API like ChatGPT and improve its performance through prompt engineering and retrieval augmented generation (which I have written an article about).
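Retrieval augmented generation boils down to two steps: find the passages in your own documents that are most relevant to a question, then paste them into the prompt so the model answers from them. Here is a minimal, dependency-free sketch of that flow. It deliberately swaps in a crude bag-of-words cosine similarity where a real system would use vector embeddings; the documents are made up; and `retrieve` and `build_prompt` are hypothetical helpers. The resulting string is what you would send to a chat model such as ChatGPT.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = bag_of_words(question)
    return sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]


def build_prompt(question: str, docs: list[str], k: int = 2) -> str:
    """Ground the prompt in the retrieved passages before asking the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Made-up internal documents, for illustration only.
docs = [
    "Claims must be filed within 30 days of the incident.",
    "Our office is closed on public holidays.",
    "Premiums are billed monthly unless the policy states otherwise.",
]
question = "How many days after an incident do claims need to be filed?"
print(build_prompt(question, docs, k=1))
```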

If this doesn’t work, try fine-tuning open-source models on your data (or training from scratch, if you have the resources and bravery!). I would echo this advice for showcasing or exploring the potential applications of LLMs in your industry. Even if you know that ChatGPT is probably not right for your application, you can test it on representative sample data. Once you get a sense of the possibilities, you can swap out your LLM. That way, you can build a proof-of-concept, impress stakeholders, and make a difference!
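One way to keep that swap cheap is to have the proof-of-concept talk to the model through a small interface of your own, so moving from ChatGPT to a self-hosted open-source model means writing one new class rather than touching the application code. A minimal sketch using Python’s `typing.Protocol` follows; `TextGenerator`, `EchoBackend`, and `tagline_service` are hypothetical names, and a real backend would wrap whichever client library or local model you actually use.

```python
from typing import Protocol


class TextGenerator(Protocol):
    """Anything that turns a prompt into text can serve as the app's LLM."""

    def generate(self, prompt: str) -> str: ...


class EchoBackend:
    """Offline stand-in backend; a real one would call an API or a local model."""

    def generate(self, prompt: str) -> str:
        return f"[echo] {prompt}"


def tagline_service(llm: TextGenerator, business: str) -> str:
    # Application code depends only on the interface, not on a vendor SDK.
    return llm.generate(f"Write a tagline for {business}.")


print(tagline_service(EchoBackend(), "an ice cream shop"))
```

Because `Protocol` uses structural typing, a backend class does not need to inherit from `TextGenerator`; it only needs a matching `generate` method for a type checker to accept it.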

I hope this helps on your journey of applying LLMs to create awesome products, and I look forward to hearing all about it in the comments!

If you like this post, follow me — I write on Generative AI in real-world applications and, more generally, on the intersections between data and society.

Feel free to connect with me on LinkedIn!

Here are some related articles:

When Should You Fine-Tune LLMs?

LLM Economics: ChatGPT vs Open-Source

Deploying Open-Source LLMs As APIs

How Do You Build A ChatGPT-Powered App?

Extractive vs Generative Q&A — Which is better for your business?

Fine-Tune Transformer Models For Question Answering On Custom Data